This is part 2 of my D&D Tabletop Tracking System project. If you haven’t yet, you might want to read part 1 to catch up!

This post describes the state of the project as of November 2024.

Demo First!

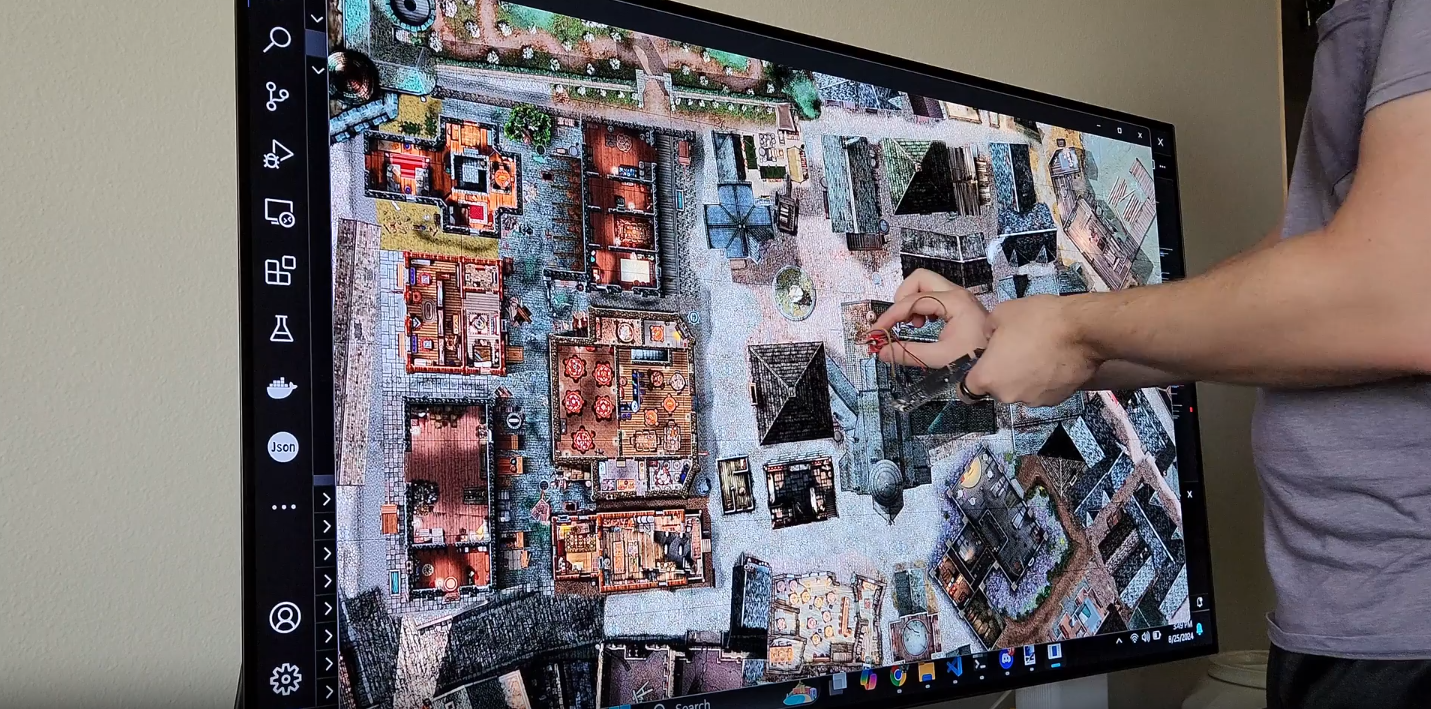

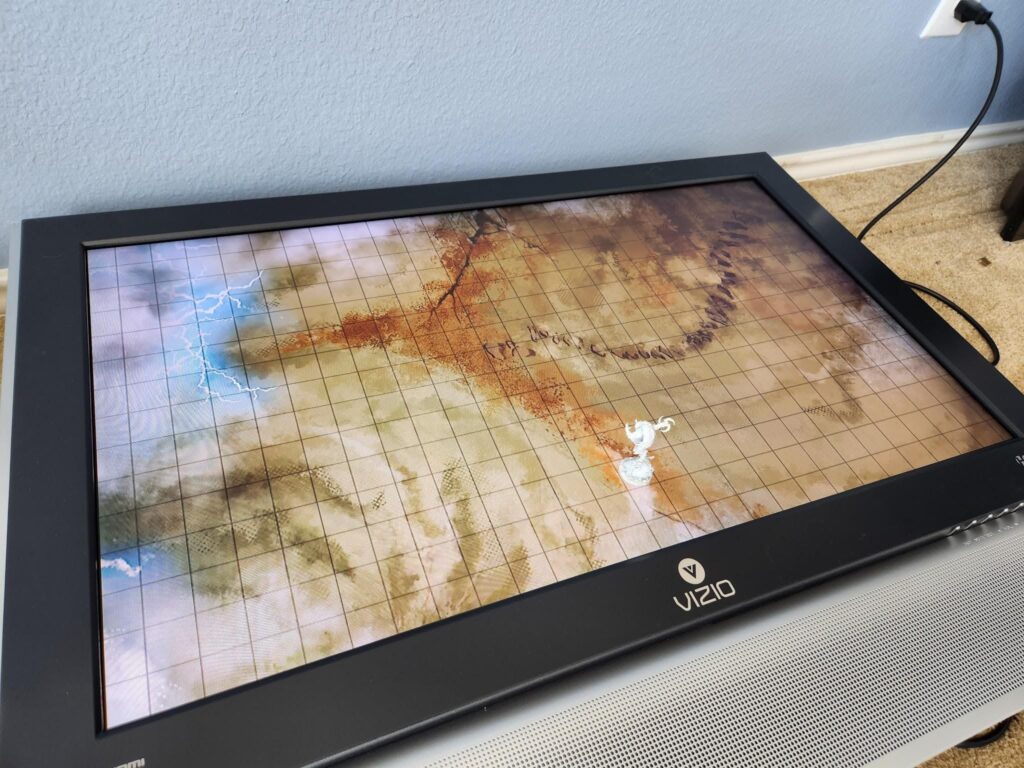

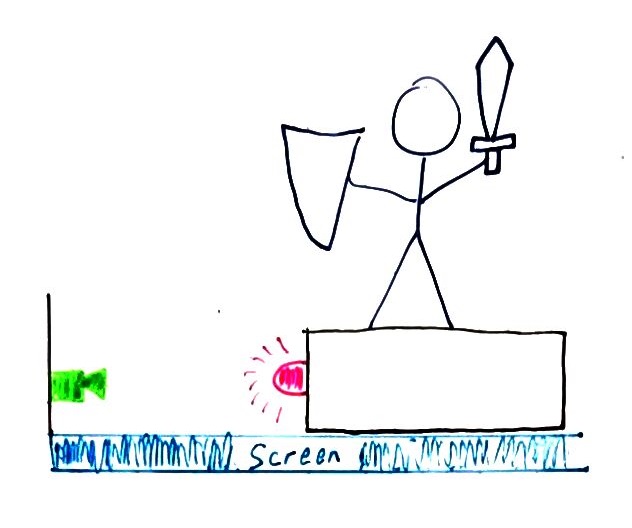

This demo shows a program running on the target microcontroller (an nRF52) with a light sensor (not quite the one I intend to use, but similar enough). The whole thing runs on battery power and can locate itself on the map. If you watch carefully, you can see the screen flash a sort of grid, visible as patches of differing brightness. For demonstration purposes, the flashing is much slower than the goal, which is to flash on each frame (~33ms per flash). Once the light sensor has worked out a location by watching the flashes, a green box is drawn around it to show where the system thinks it is.
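To make the flashing idea concrete, here is a minimal sketch of one way a flash sequence could encode a location. This is not necessarily the scheme used in the demo. It assumes the screen is split into a grid of cells and that, on flash number `bit`, a cell is lit only if that bit of its cell index is 1. A sensor that records bright or dark for each flash can then rebuild its own cell index. The function names and the 32x18 grid are made up for illustration.

```python
def frames_needed(cols, rows):
    """Flashes needed so every cell gets a unique bright/dark sequence."""
    return max(1, (cols * rows - 1).bit_length())

def flash_pattern(cols, rows, bit):
    """Grid of booleans for one flash: True means the cell is lit."""
    return [
        [(((r * cols + c) >> bit) & 1) == 1 for c in range(cols)]
        for r in range(rows)
    ]

def decode_cell(readings, cols):
    """Rebuild (col, row) from the bright/dark readings of a single sensor."""
    index = 0
    for bit, bright in enumerate(readings):
        if bright:
            index |= 1 << bit
    return index % cols, index // cols

COLS, ROWS = 32, 18  # hypothetical grid size
n_flashes = frames_needed(COLS, ROWS)

# Simulate a sensor sitting on the cell at column 5, row 7.
readings = [flash_pattern(COLS, ROWS, bit)[7][5] for bit in range(n_flashes)]
located = decode_cell(readings, COLS)
print(located, "found after", n_flashes, "flashes")
```

With this hypothetical grid, 10 flashes are enough to tell 576 cells apart, which at ~33ms per flash would be roughly a third of a second.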

Introduction

For this post, I wanted to discuss the design constraints I’ve been working under, the hardware so far, some certification stuff that I had not considered, and an unexpected hard problem. I’ve received a lot of suggestions while working on this project, which have been extremely helpful, but since others don’t know the reasons for some of my decisions, I’ve had to reject some input. My hope is that communicating which design constraints I’ve been using, and why, will help get everyone on the same page.

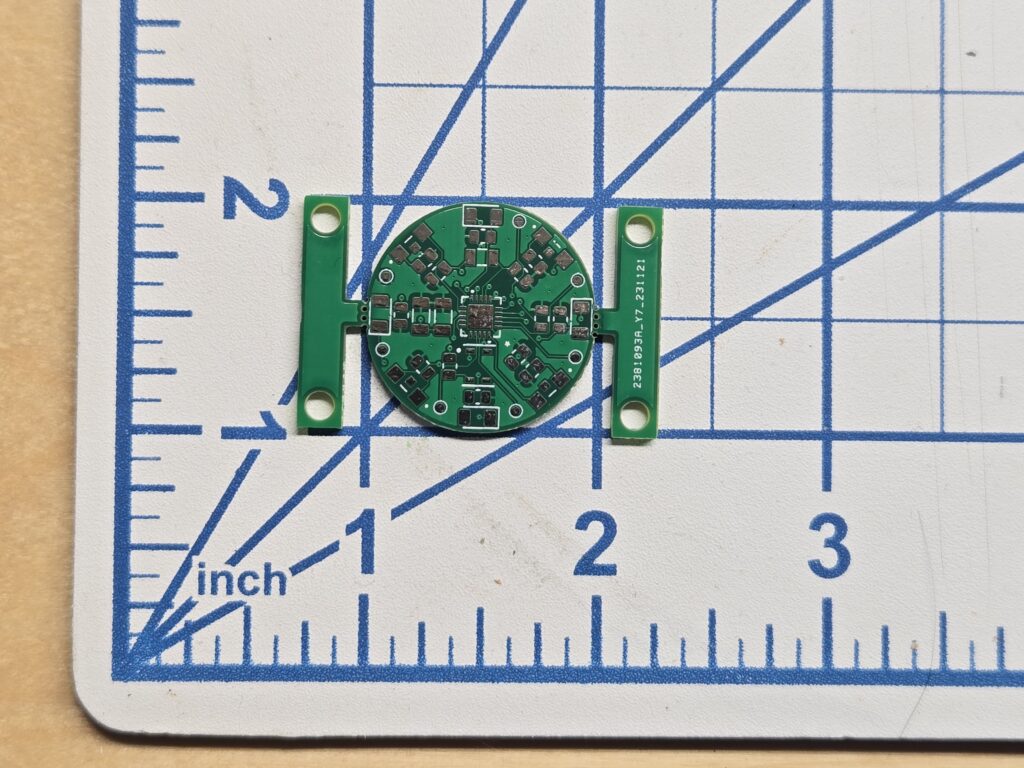

Since my last update, I have continued to work on this project and am even close to sending it out for manufacturing! Hopefully, I will soon get physical copies with all the sensors and microcontrollers integrated into an approximately 1-inch diameter printed circuit board.

Design Constraints

While working on this, I have been using the following constraints to guide my solution:

- Minimal External Hardware

- Size

- Power

- Cost

I treat these as goal/guiding principles, so if there is a reason to violate/adjust them, that is ok. At the end of the day, this is a “for fun” project, but I’ve at least tried to keep a bit of structure around it.

Minimal External Hardware

For this project, I need to minimize the amount of “stuff” required to make this work. At a minimum, I know I already need the following:

- A TV: Already in use to display the dynamic map.

- A Computer: Needed to run the virtual tabletop system (VTT)

- Minis: Physical tokens to put on the map

This means I want to avoid the following where possible:

- External Cameras: These require some sort of mounting solution and power supply, and can be pricy.

- Frames: This is anything that attaches to the TV. These take up space and may require some form of careful alignment.

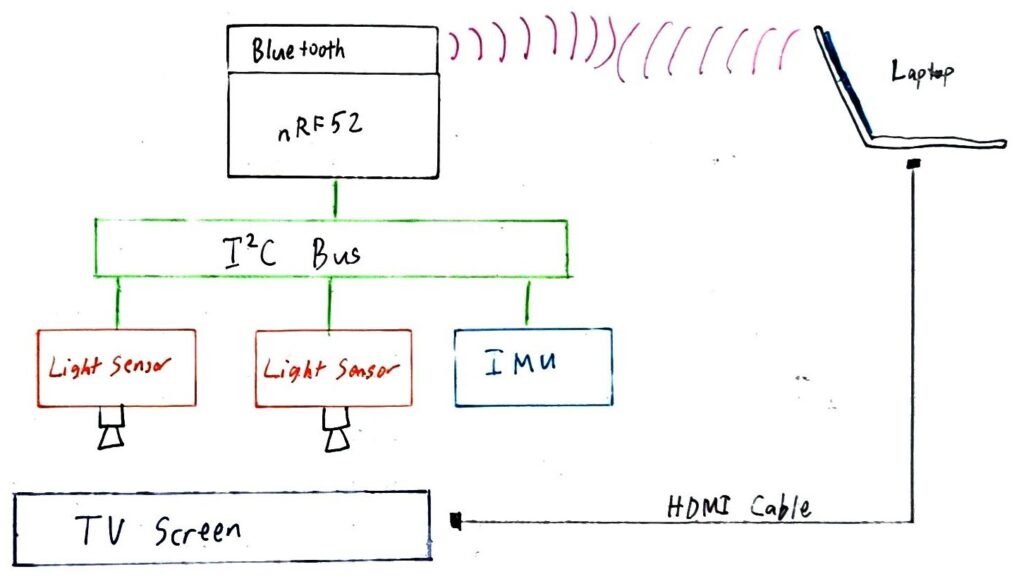

- Bridging Devices: This would be any device that sits to the side which acts as a way for any remote devices to connect to the computer running the VTT.

Size

This has been one of the more difficult constraints and is also a heavy driver of the “Power” constraint that follows. Miniature bases are approximately 1 inch in diameter, and standard grid sizes are 1 inch. Since we are working with a map on a TV screen, technically we can expand the grid (for example: make it a 2-inch grid). However, that causes problems like significantly reducing the amount of map that can be displayed at any given time. I also think bases that are much bigger than the mini would look sloppy, and from laying out the components I believe I can fit everything within that 1-inch diameter.

Power

Running any cable to the mini bases would violate the first constraint (Minimal External Hardware). Therefore, any power needed by the base has to come from a battery. Unless I want the base to be really tall (multiple inches), the only viable power source is coin cell batteries.

Coin cells have limited capacity, but even more limiting is how much current they can source at any moment in time. In general, a CR2032 (the one that looks like a quarter) can only source a continuous current of 15 milliamps. For reference, many small LED lights consume roughly that amount. This means we have to power all our sensors and our microcontroller on that limited amount of power! Since we aren’t running cables back to the computer, whatever we use for wireless communication also needs to fit within this power budget.
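As a rough illustration of that budgeting, the sketch below adds up peak currents against the 15 milliamp limit and estimates battery life from an average draw. Every number in it other than the 15 mA limit is a placeholder I made up for the example, including the assumed 220 mAh capacity and the 0.5 mA average. None of them are measurements or datasheet values.

```python
CR2032_MAX_CONTINUOUS_MA = 15.0  # limit quoted in the text above

# Placeholder peak currents in mA: illustrative guesses, NOT datasheet values.
peak_draw_ma = {
    "microcontroller + radio": 8.0,
    "two light sensors": 0.1,
    "IMU": 0.6,
}

def total_draw(loads):
    """Worst case: everything drawing its peak current at the same time."""
    return sum(loads.values())

def fits_budget(loads, limit_ma=CR2032_MAX_CONTINUOUS_MA):
    """True if the combined peak draw stays within the coin cell's limit."""
    return total_draw(loads) <= limit_ma

def battery_life_hours(capacity_mah, average_ma):
    """Idealized life: capacity divided by average current."""
    return capacity_mah / average_ma

print("fits budget:", fits_budget(peak_draw_ma))
# Assumed 220 mAh capacity and a hypothetical 0.5 mA average draw.
print("hours of life:", battery_life_hours(220.0, 0.5))
```

The average draw matters as much as the peak: the more time the microcontroller and radio spend asleep, the lower the average and the longer the battery lasts.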

Cost

While I don’t yet know if I will try to commercialize this, I wanted to try to design it in case I do. Making 1 or 2 of a thing is a lot different than making thousands of a thing and they have very different cost structures. If my bill of materials gets too expensive, then after I add in manufacturing costs and profit margin, it could end up too expensive and no one would be willing to buy it.

This means I probably can’t pick the biggest and baddest microcontroller, the best inertial measurement unit on the market, or the fanciest high-resolution camera. Instead, I need to pick “good enough” components that satisfy my requirements without providing excess capacity that doesn’t add value.

Engineering is all about resource allocation. Anyone can build a bridge, but only an engineer can build one that barely stands.

Hardware So Far

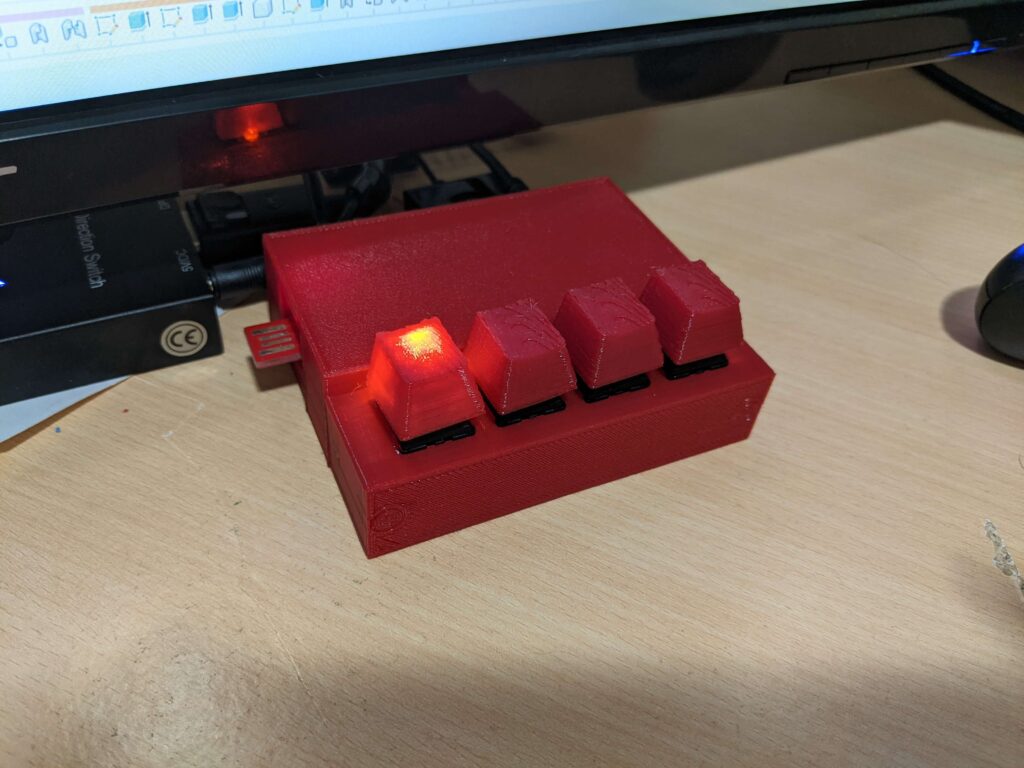

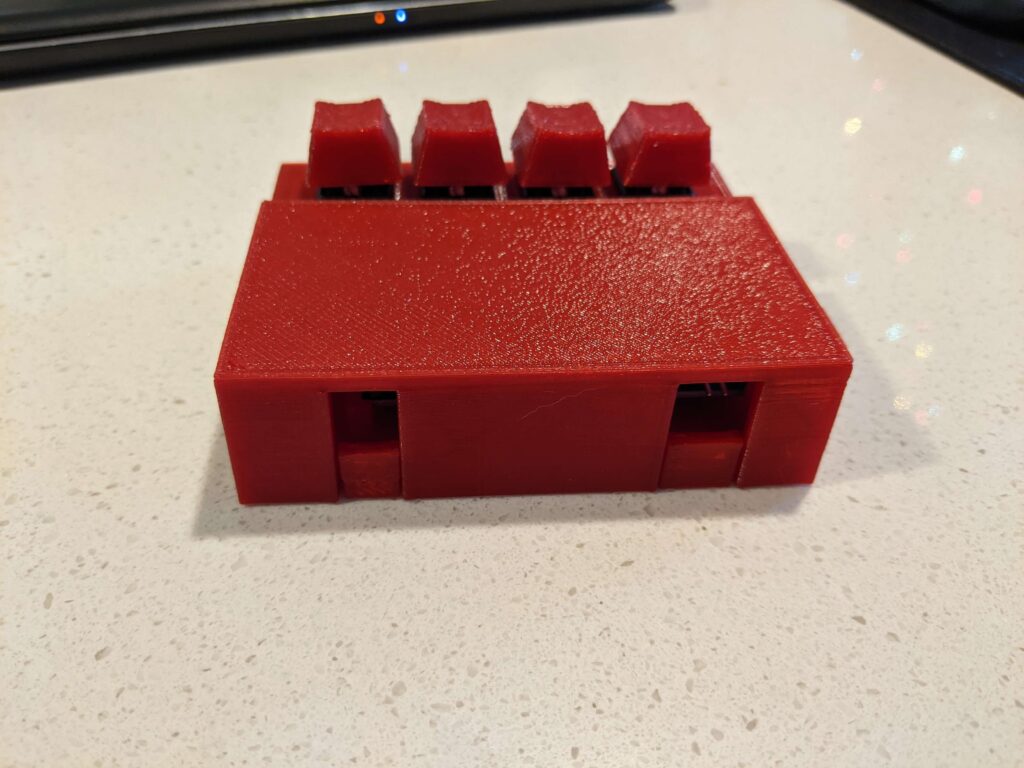

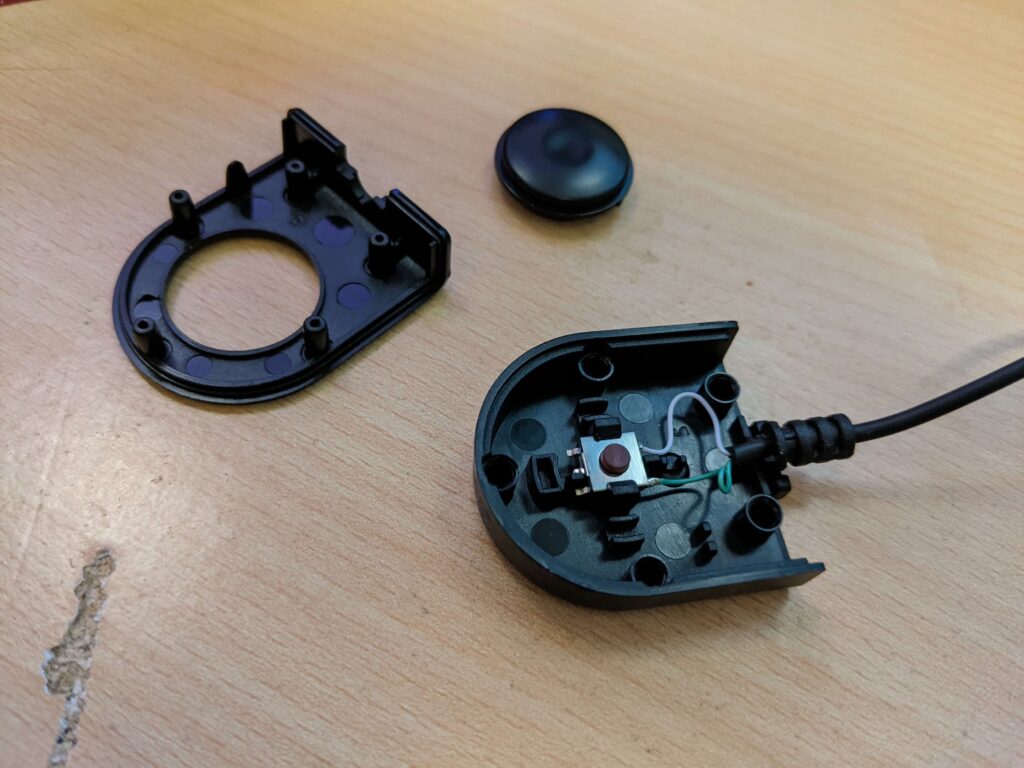

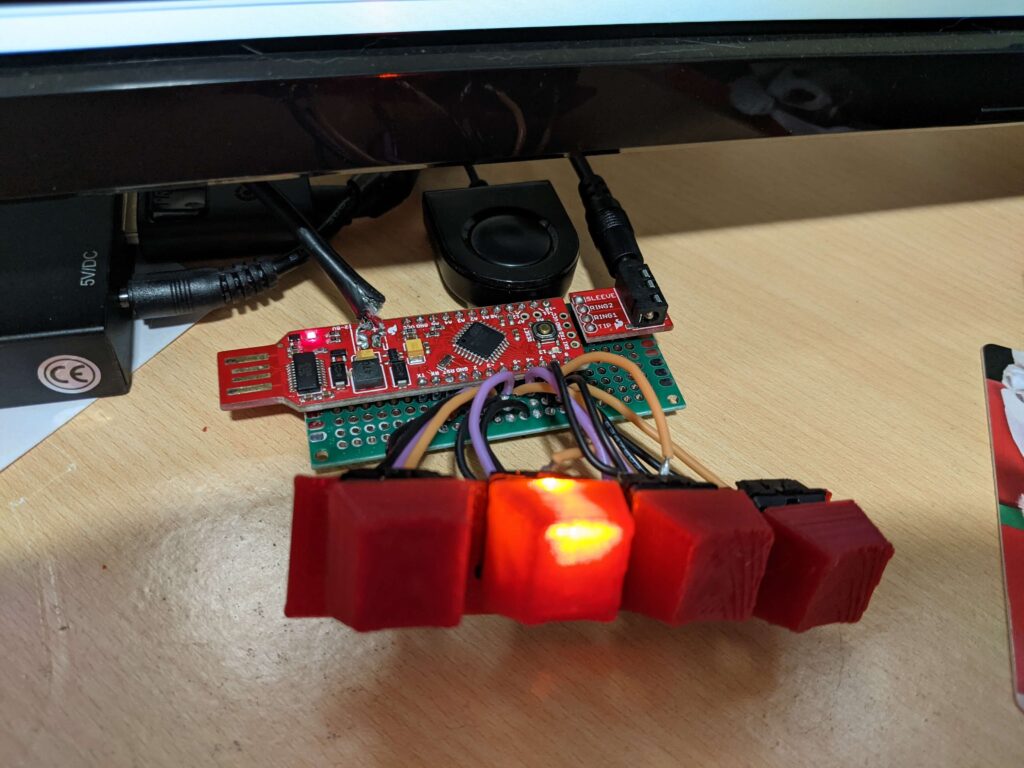

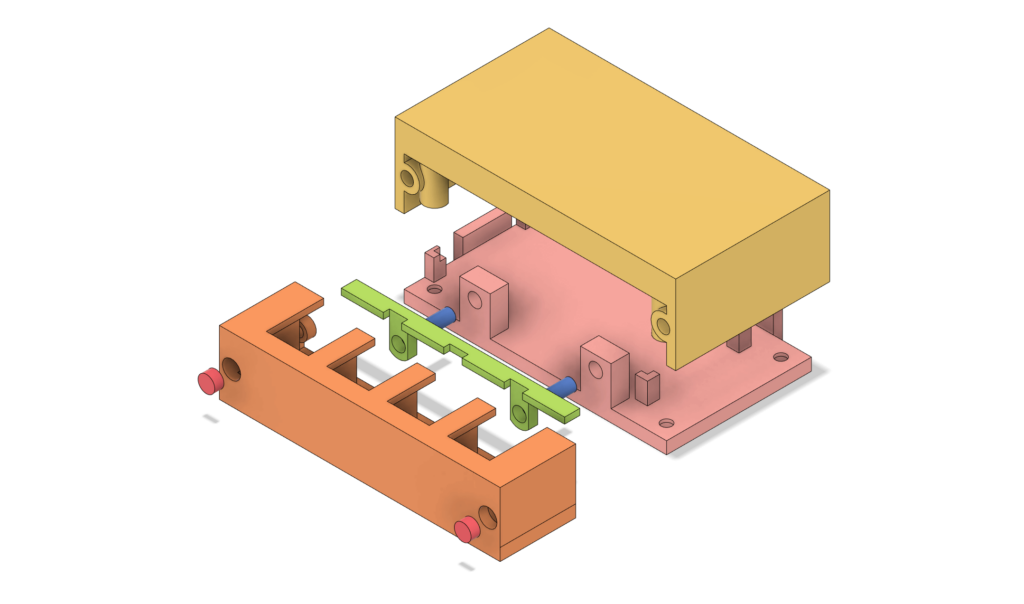

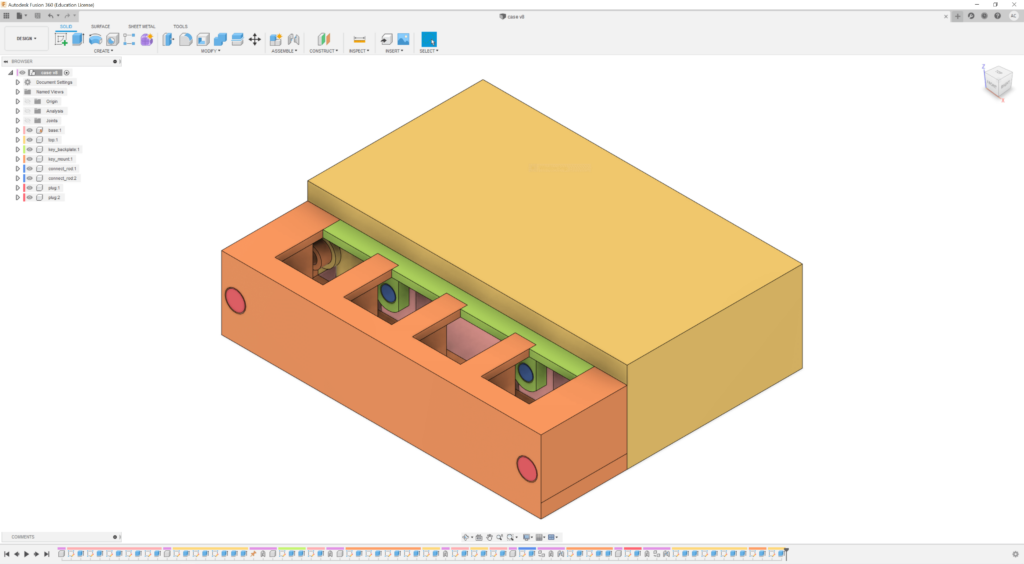

I have prototyped out some hardware which is currently able to implement what I called the “Duck Hunt” solution from part 1. It is all built into a base that the mini would sit on.

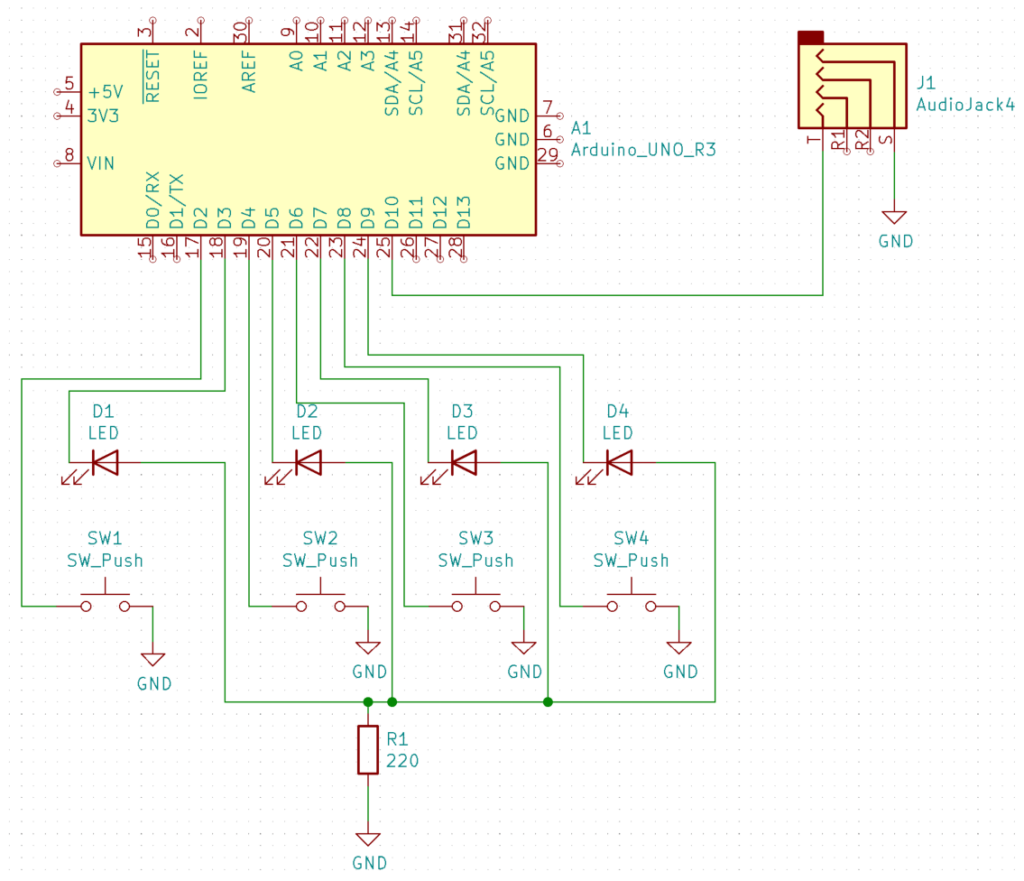

The major components are:

- nRF52: This is the microcontroller that is responsible for reading the sensors and communicating with the computer via Bluetooth

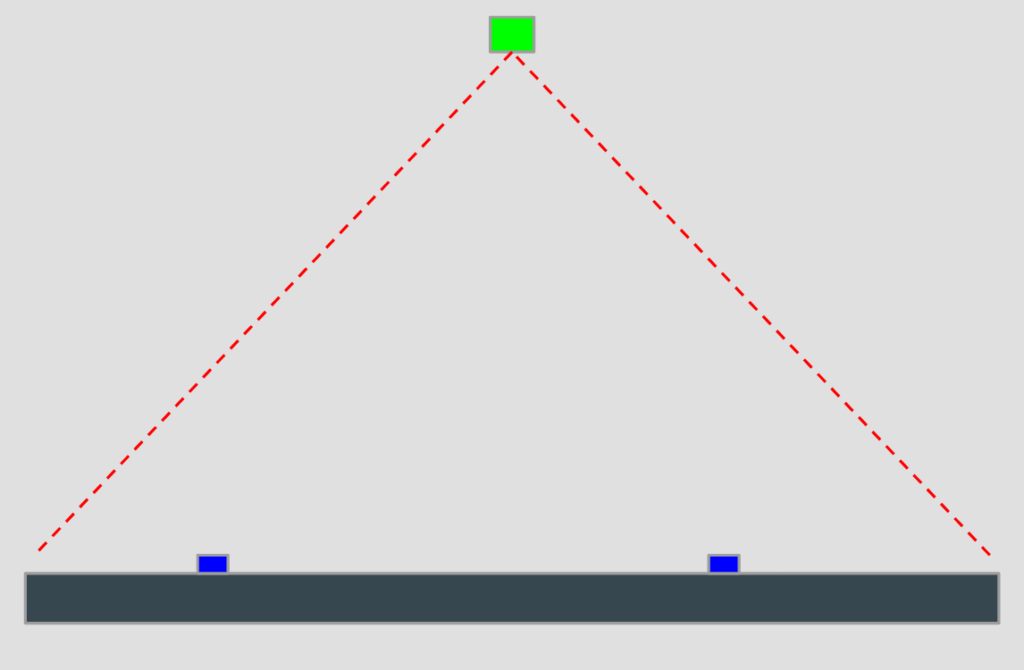

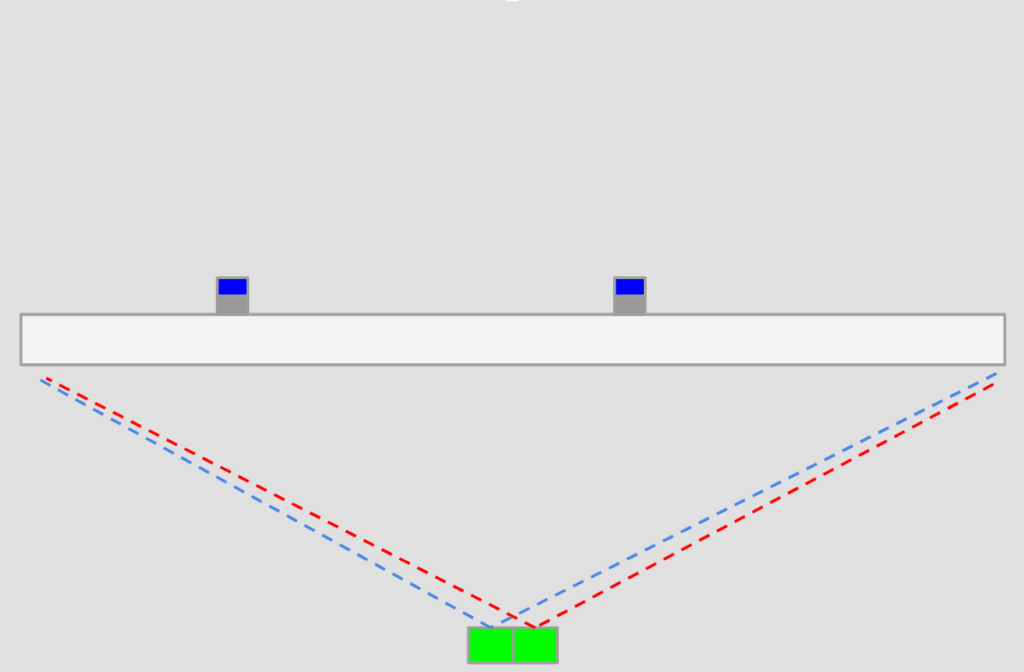

- OPT4001: Two of these act as light sensors that watch the screen for shifts in brightness. They are not sensitive to any particular wavelength (they read general brightness, not specific colors). Why two? The idea is by putting some sort of gradient on the screen I can use the different readings between the sensors to determine rotation.

- LSM6DSO: A six-axis inertial measurement unit (IMU). It includes a 3-axis accelerometer and a 3-axis gyroscope. This sensor is intended to detect pickup and placement of the mini base, as well as approximate its location in space while it is being held/moved (more on this below).
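The two-sensor rotation idea can be sketched with a little trigonometry. This is only a guess at how it might work, not something the hardware does today. It assumes the screen shows a linear brightness ramp along x and then along y, and that the two sensors sit a fixed distance apart along the base’s forward axis. The difference between the two readings is then proportional to the cosine of the rotation during the x ramp and to the sine during the y ramp. The function names, `spacing`, and `slope` are all hypothetical.

```python
import math

def sensor_difference(theta_deg, spacing=1.0, slope=1.0):
    """Simulate (sensor B - sensor A) under an x ramp and then a y ramp.

    Assumes brightness rises linearly at `slope` per unit distance and the
    two sensors sit `spacing` apart along the base's forward axis.
    """
    theta = math.radians(theta_deg)
    dx = slope * spacing * math.cos(theta)  # difference seen during the x ramp
    dy = slope * spacing * math.sin(theta)  # difference seen during the y ramp
    return dx, dy

def heading_degrees(dx, dy):
    """Recover the base's rotation (0 to 360 degrees) from the two differences."""
    return math.degrees(math.atan2(dy, dx)) % 360.0

dx, dy = sensor_difference(135.0)
print(round(heading_degrees(dx, dy), 1))
```

Because `slope` and `spacing` scale both differences equally, they cancel inside `atan2`, so under these assumptions the steepness of the gradient would not need to be known exactly.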

This current solution aligns with the above constraints:

- Minimal External Hardware: The only additional hardware is the base which attaches to the bottom of the mini.

- Size: Layouts of the selected components fit within a 1-inch diameter. The coin cell (CR2032) plus its holder are just under the 1-inch diameter. With a plastic case around the circuit board and battery, we might miss the 1-inch diameter by a few millimeters.

- Power: Powered from a CR2032 coin cell battery. With how low-powered the nRF52 is, I think it may be able to sustain weeks of active use (and months of standby time).

- Cost: The current bill of materials is roughly $10 for each when building in batches of 100.

Certifications

Something I didn’t think about needing for this project is a wireless certification. It makes sense though: we are emitting radio energy, and regulatory bodies such as the FCC have opinions on that. This is only an issue if I intend to sell these, not if I’m only making them for myself.

Certification is expensive! Since we are using a radio, this device would be classified as an “intentional radiator”. I’ve seen estimates anywhere in the range of $10k to $100k to get these kinds of devices certified, which would be backbreaking for a project of this small scale. There are some carve-outs for small-scale production, which allows you to see if there is market fit before paying for a full certification, but overall this is a big hassle and expense.

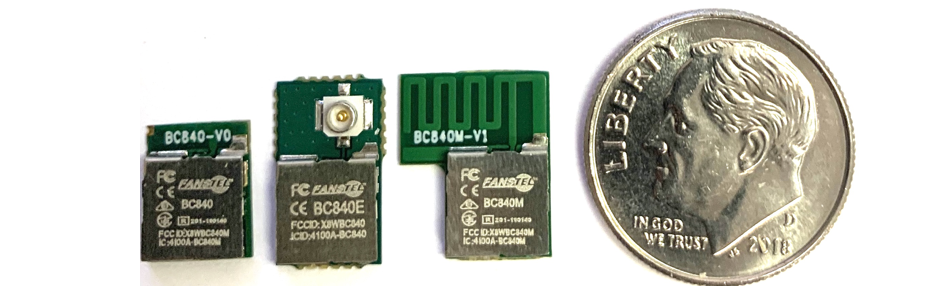

There is, however, a remedy for this. If I instead use a pre-certified radio “module”, then I only need to get certified as an “unintentional radiator”, which is much cheaper, in the range of $1-2k. For this project, I’m using an nRF52, which has Bluetooth radio functionality built in. If I buy a pre-certified module I’ll pay a little more per unit, but I won’t have to design my own antenna or go through the much more stringent and much more expensive intentional radiator certification process.

Unforeseen “Hard” Problems

With the “duck hunt” approach, every time you move or place the mini base you will trigger a location update routine. This is where we get the screen flashing shown in the demo above. While the intensity of the flashes can hopefully be minimized, it will still likely cause some amount of flicker. That update will happen on every move, regardless of how small or simple it is.

To solve this, I figured why not include an inertial measurement unit (IMU). I already needed some way to detect that a mini base had been moved to trigger a location update and an IMU is a great fit for that task. If an IMU already measures acceleration and rotation, how hard could it be to have it use that data to estimate the new position of the mini base? Then I could do a “guess and check” where I flash only under the mini (to see if the estimate was correct). If the estimate is incorrect then worst case I fall back to flashing the whole screen. Easy right?

WRONG! After playing with the IMU and some test code, I began to suspect that I might have stumbled into a legitimately hard problem. The issue I kept seeing, regardless of how I tried to correct for it, was that my IMU would build up positional error rapidly. Within seconds it would think it was somehow meters away, even if it was physically not moving.

The following video does a really good job of explaining the problem I stumbled into (23ish minutes in, the section on double integration):

If you don’t want to watch the above video, here is a quick summary of the problem. An accelerometer measures acceleration, not position. To get the position, you:

- Take the acceleration and multiply it by the difference in time since your last measurement, then add that to your previous velocity, giving you your current velocity.

- Take your current velocity and multiply by the time difference again, which will give the distance you have moved since your last measurement. Adding that to your previous position gives you your current position.

At every step, any error you have gets magnified. Additionally, the accelerometer measures acceleration, which includes acceleration due to gravity. If you have any error in removing the gravity vector from your measurements, your position estimates will be wildly off.
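Here is a minimal sketch of those two integration steps, in one dimension, to show how quickly a small error snowballs. The bias value and sample rate are hypothetical numbers picked for illustration, not measurements from my IMU.

```python
def integrate_position(accel_samples, dt):
    """Dead-reckon 1-D position from acceleration samples (m/s^2) spaced dt seconds apart."""
    velocity = 0.0
    position = 0.0
    for a in accel_samples:
        velocity += a * dt         # step 1: acceleration -> velocity
        position += velocity * dt  # step 2: velocity -> position
    return position

# A base sitting perfectly still, but the accelerometer reports a small constant bias.
BIAS = 0.05      # m/s^2, hypothetical (about 0.5% of gravity)
SAMPLE_HZ = 100  # hypothetical sample rate
DT = 1.0 / SAMPLE_HZ

for seconds in (1, 5, 10):
    samples = [BIAS] * (seconds * SAMPLE_HZ)
    drift = integrate_position(samples, DT)
    print(f"{seconds:>2} s -> {drift:.2f} m of phantom movement")
```

A constant bias `b` turns into a position error of roughly `0.5 * b * t^2`, so the error grows with the square of time. With this made-up bias, which is about what a 0.3 degree mistake in the gravity direction would produce, that works out to around 2.5 meters after 10 seconds without the base moving at all.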

An Opportunity for a Rabbit Hole

Not being able to interpret the IMU data to determine our position directly is a big bummer. If this was straight impossible, we could just walk away. However, I found a bunch of fairly recent research discussing using machine learning (ML) to interpret the IMU and output corrected position information.

Here is a link dump of several examples of using ML to interpret and correct IMU data:

- https://github.com/mbrossar/ai-imu-dr

  - Claims an impressive 1.1% translational error after several hundred meters

- https://yanhangpublic.github.io/ridi/index.html

  - Uses models of human motions to correct IMU data for localization from only a 6 degree of freedom (DoF) IMU

- https://ronin.cs.sfu.ca/

  - Update to RIDI (above link)

- https://github.com/jpsml/6-DOF-Inertial-Odometry

  - Learning framework for using 6 DoF IMU data with a microcontroller for localization

Approaches like this are fascinating, but they present a new problem: several require some form of ML model, which takes a lot of compute and memory to run. Microcontrollers are resource-constrained: they have limited processing power, memory, and even storage. So is it even possible to use these approaches? Yes! There is something called TinyML/TensorFlow Lite for Microcontrollers!

As long as your ML model isn’t too complex, it is possible to run it on the microcontroller directly. Here are some books focused on TinyML:

- TinyML [Book]

- TinyML Cookbook – Second Edition [Book]

The siren call is strong, but I must remember that position estimation from an IMU is not required for a minimum viable product, so for now I’m going to let this go.

Conclusion

Congrats on reading this far! I hope you enjoyed this update (and if you did, consider sharing it with a friend!). Be sure to keep an eye out for part 3 (which should be the current state of the project). If you are interested in the code I’ve been generating while playing with this, check it out at: https://github.com/anichno/tabletop-mini-tracker.