
Introduction

I don’t know about you, but I have a lot of “stuff” just sitting in bins, especially around my project space. This stuff is largely a collection of tools and parts from projects I’ve done in the past, including parts reclaimed from abandoned or incomplete projects and the tools I bought to help me work on them. This stuff is potentially useful, though, and expensive enough that I’m not willing to clean it all out. I’ve also found that having parts on hand reduces “friction” when starting something new: if I already have the parts, it’s much easier to play with a new idea or concept. Amazon 2-day shipping is fast, but it’s not “open a drawer or box” fast.

Problem Statement

With all this stuff lying around, it’s hard to know what I have. Even worse, I might know I have a particular part or tool but have no idea where it actually is! Wouldn’t it be cool if I had a way to search for the stuff I have, and have that system tell me where it is?

Playing with a solution

To begin, I just started creating notes in Obsidian. For each item, I took a photo and linked it to the note. Then I gave it a name and a text description. That way, I could “search” on keywords and get a picture to compare against what I thought I was looking for. For organization, I could sort the notes into folders (example: Project Room -> Closet -> Bin 1 -> test_probe.md).

As a basic approach, this actually worked, but there is a lot of overhead. The process currently looks like this:

- Take a picture of the item.

- Create a note in the appropriate folder structure.

- Give the item a name.

- Give the item a description.

- Link photo to item.

Other than the problem of me just being plain lazy and the above being a lot of work, I have another problem: what if, when I search, I can’t come up with the right text?

Semantic search to the rescue!

Instead of searching based on literal text (lexical search), what if we could search on concepts and meaning? This is where semantic search comes in. Semantic search attempts to find results by considering how semantically similar (how close in meaning) your query is to each possible result. Computerphile has a really good explainer of the core idea behind this, vector embeddings.

In short, by looking at how words are used across lots of example text, we can model how similar they are to other words. In practice, these words (or even phrases) can be placed into a high-dimensional space, where each dimension might represent things like “how catlike vs. doglike”, “how fancy”, or “how male or female”. (These are just examples: a machine learning system “learns” how to use its dimensions based on its input data and its evaluation function, and the learned dimensions are likely not as simple as mine.)
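To make “close in meaning” concrete: once words are vectors, closeness is commonly measured with cosine similarity, the cosine of the angle between two vectors. The minimal sketch below uses hand-made three-dimensional toy vectors on hypothetical “catlike”, “fancy”, and “small” axes; real models such as mxbai-embed-large-v1 produce 1024 learned dimensions that don’t map to human-readable concepts like these.

```rust
/// Cosine similarity: dot(a, b) / (|a| * |b|).
/// 1.0 means the vectors point the same way; values near 0.0 mean unrelated.
fn cosine_similarity(a: &[f32], b: &[f32]) -> f32 {
    assert_eq!(a.len(), b.len(), "embeddings must have the same dimension");
    let dot: f32 = a.iter().zip(b).map(|(x, y)| x * y).sum();
    let norm_a = a.iter().map(|x| x * x).sum::<f32>().sqrt();
    let norm_b = b.iter().map(|x| x * x).sum::<f32>().sqrt();
    dot / (norm_a * norm_b)
}

// Hypothetical toy vectors on [catlike, fancy, small] axes. Purely illustrative.
const KITTEN: [f32; 3] = [0.9, 0.2, 0.9];
const HOUSE_CAT: [f32; 3] = [0.9, 0.3, 0.7];
const POODLE: [f32; 3] = [0.1, 0.9, 0.6];
```

With these numbers, the kitten scores about 0.99 against the house cat and about 0.58 against the poodle, which is the kind of ranking semantic search relies on.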

Using this for search is really “easy”; all you need to do is:

- Use an embedding model to encode the names and descriptions into a high-dimensional space

- Store each embedding in a vector database (in practice, an embedding is just a series of floating-point numbers)

- Generate an embedding for any query we would like to run

- Search the vector database for the nearest neighbors of that query
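The four steps above can be sketched in a few lines if we replace the vector database with a plain in-memory list and assume the embeddings were already computed. This is a brute-force nearest-neighbor search: compare the query to every stored vector using Euclidean (L2) distance and keep the k closest. `Entry` and `nearest_neighbors` are hypothetical names for illustration; a vector database does the same job with proper storage and indexing.

```rust
/// One stored entry: an item id plus its embedding (hypothetical layout).
struct Entry {
    item_id: u32,
    embedding: Vec<f32>,
}

/// Euclidean (L2) distance between two vectors of equal length.
fn l2_distance(a: &[f32], b: &[f32]) -> f32 {
    a.iter()
        .zip(b)
        .map(|(x, y)| (x - y) * (x - y))
        .sum::<f32>()
        .sqrt()
}

/// Brute-force k-nearest-neighbor search: score every entry against the query,
/// sort by distance, and return the k closest (item_id, distance) pairs, nearest first.
fn nearest_neighbors(store: &[Entry], query: &[f32], k: usize) -> Vec<(u32, f32)> {
    let mut scored: Vec<(u32, f32)> = store
        .iter()
        .map(|e| (e.item_id, l2_distance(&e.embedding, query)))
        .collect();
    // total_cmp gives f32 a total order, so sorting never panics on NaN.
    scored.sort_by(|a, b| a.1.total_cmp(&b.1));
    scored.truncate(k);
    scored
}
```

Brute force is fine at the scale of a personal inventory (hundreds or thousands of vectors); approximate indexes only start to matter at much larger sizes.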

Standing on the shoulders of giants

Luckily, tools for the above exist. Here are the ones I used:

- FastEmbed

- For embeddings, I used FastEmbed. Specifically, I used its Rust implementation, since I wrote my web app in Rust. This library can generate the embeddings for my descriptions as well as my queries.

- Mxbai-embed-large-v1

- I picked this model somewhat arbitrarily. It seems to rank well on embedding leaderboards, and I had plenty of RAM to run it locally (approx. 1.25 GB).

- Sqlite-vec

- This provides a Sqlite extension that can do the necessary vector searches. What’s nice is that Sqlite gets built into your application, so you don’t need to manage a whole separate database server. Instead, you have Sqlite as a library and a single file that holds the state of the database.
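As a minimal sketch of how sqlite-vec might be used here: vectors live in a `vec0` virtual table, and a k-nearest-neighbor query uses `MATCH` together with a `k` constraint. The table and column names (`vec_items`, `embedding`) are hypothetical, 1024 is assumed because that is mxbai-embed-large-v1’s output size, and actually executing the SQL would need a SQLite binding with the extension loaded, which is omitted. The helper functions show the compact vector encoding sqlite-vec accepts, as I understand its docs: a BLOB of raw little-endian 32-bit floats (JSON text also works).

```rust
/// Hypothetical vec0 table: one 1024-dimensional float32 vector per row.
const CREATE_TABLE: &str =
    "CREATE VIRTUAL TABLE IF NOT EXISTS vec_items USING vec0(embedding float[1024])";

/// KNN query: ?1 is the query embedding as a BLOB; nearest rows come first.
const KNN_QUERY: &str = "SELECT rowid, distance FROM vec_items \
     WHERE embedding MATCH ?1 AND k = 10 ORDER BY distance";

/// Encode an embedding as a compact BLOB: little-endian f32s, 4 bytes each.
fn embedding_to_blob(embedding: &[f32]) -> Vec<u8> {
    embedding.iter().flat_map(|x| x.to_le_bytes()).collect()
}

/// Decode a BLOB back into floats (e.g. to inspect what was stored).
fn blob_to_embedding(blob: &[u8]) -> Vec<f32> {
    blob.chunks_exact(4)
        .map(|c| f32::from_le_bytes([c[0], c[1], c[2], c[3]]))
        .collect()
}
```

Keeping the vectors in the same single Sqlite file as the rest of the app’s data is what makes this setup attractive: there is no second service to run.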

New (old) problem: I’m lazy

Semantic search is cool and all, but do I really have to write all those names and descriptions? The above approach to data ingest is going to be way too burdensome, involving lots of time and decision-making per item. However, you know who will happily give me names and descriptions for money? OpenAI! (Or any other large language model (LLM) provider with models that can process images.)

Given an image as context, the LLM query looks like this:

- System Prompt: “You are a helpful item identifier and describer. You always respond in valid JSON.”

- “Please give a short name for this object.”

- “Please give a full description of what you see in this image. Do not mention the background or any human hands.” (If I was holding something like a USB cable, the LLMs really liked to tell me about my hands)

Pro tip: You can constrain the output to valid JSON. Just provide a JSON schema with the request, along with an instruction in the system prompt to respond in JSON (https://platform.openai.com/docs/guides/structured-outputs).
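For illustration, here is roughly the shape of an OpenAI Chat Completions request body that attaches a photo and asks for output matching a JSON schema via `response_format`. It is built with plain `format!` to stay dependency-free. The `name`/`description` field names, the schema name `item`, and the data-URL input are assumptions for this sketch. In strict mode, every property has to be listed as required and `additionalProperties` set to false. A real client should use a JSON library such as serde_json, because `format!` does not escape its inputs.

```rust
/// JSON schema the model's reply must match (hypothetical field names).
const ITEM_SCHEMA: &str = r#"{
  "type": "object",
  "properties": {
    "name": { "type": "string" },
    "description": { "type": "string" }
  },
  "required": ["name", "description"],
  "additionalProperties": false
}"#;

const SYSTEM_PROMPT: &str =
    "You are a helpful item identifier and describer. You always respond in valid JSON.";

/// Build a Chat Completions request body with an image and a JSON-schema response format.
/// NOTE: inputs are inserted without JSON escaping; acceptable for a model name and a
/// base64 data URL, not for arbitrary text.
fn build_request_body(model: &str, image_data_url: &str) -> String {
    format!(
        r#"{{
  "model": "{model}",
  "messages": [
    {{ "role": "system", "content": "{system}" }},
    {{ "role": "user", "content": [
      {{ "type": "text", "text": "Please give a short name for this object." }},
      {{ "type": "text", "text": "Please give a full description of what you see in this image. Do not mention the background or any human hands." }},
      {{ "type": "image_url", "image_url": {{ "url": "{image_data_url}" }} }}
    ] }}
  ],
  "response_format": {{
    "type": "json_schema",
    "json_schema": {{ "name": "item", "strict": true, "schema": {schema} }}
  }}
}}"#,
        system = SYSTEM_PROMPT,
        schema = ITEM_SCHEMA,
    )
}
```

Because the reply is guaranteed to match the schema, the program can deserialize it straight into a name and description without any text scraping.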

Inputting new items now looks like this:

- User: Take pictures of items against a simple background.

- User: Zip them all up and tell the inventory system which container they go to.

- Program: Let OpenAI give each item a name and description.

- Program: Embeddings are generated from names and descriptions, then stored in the database.
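The program side of that workflow can be sketched as a loop over the photos for one container. The traits and names below (`Describer`, `Embedder`, `Store`, `ingest_container`) are hypothetical stand-ins for the OpenAI call, the embedding model, and the database. They only show how the steps hand data to each other, not the code in the repository. For simplicity, this sketch embeds a single “name + description” text per item; the app actually embeds several chunks per item, as described under “Potential future work”.

```rust
/// Name and description returned by the vision LLM for one photo.
struct ItemInfo {
    name: String,
    description: String,
}

/// Stand-in for the LLM call (e.g. an OpenAI request with the image attached).
trait Describer {
    fn describe(&self, photo: &[u8]) -> Result<ItemInfo, String>;
}

/// Stand-in for the embedding model.
trait Embedder {
    fn embed(&self, text: &str) -> Vec<f32>;
}

/// Stand-in for the database: records an item and its embedding under a container.
trait Store {
    fn insert(&mut self, container: &str, info: &ItemInfo, embedding: Vec<f32>);
}

/// Describe each photo, embed "name + description", and store the result.
/// Returns how many photos were ingested; failed photos are logged and skipped
/// so they can be handled by hand.
fn ingest_container(
    container: &str,
    photos: &[Vec<u8>],
    describer: &impl Describer,
    embedder: &impl Embedder,
    store: &mut impl Store,
) -> usize {
    let mut ingested = 0;
    for photo in photos {
        match describer.describe(photo) {
            Ok(info) => {
                let text = format!("{}\n{}", info.name, info.description);
                let embedding = embedder.embed(&text);
                store.insert(container, &info, embedding);
                ingested += 1;
            }
            Err(err) => eprintln!("skipping photo: {err}"),
        }
    }
    ingested
}
```

Hiding each step behind a trait also makes it easy to swap OpenAI for another image-capable LLM provider, or to test the pipeline with fakes.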

Then we can query the database based on semantic meaning instead of keywords.

Oh no, Anthony got really obsessed and made a web app

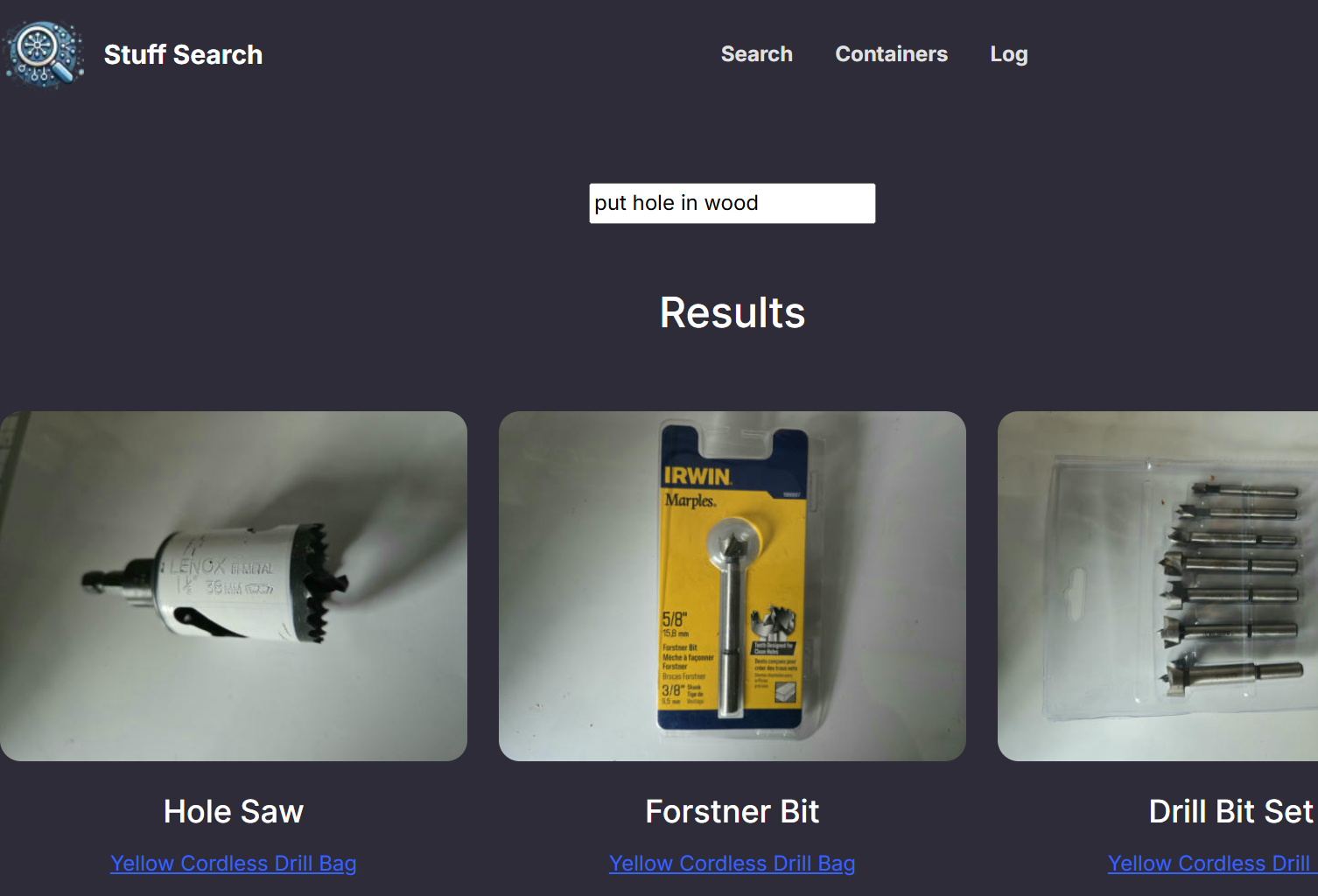

The following videos demonstrate some of the functionality and what this app looks like at the time of writing.

First, we do a search for the term “programmer”. Note how you get results such as tools that help you program things. You also get items described with the terms “developer” and “development”, which are conceptually similar to “programmer”.

Next is an example of searching based on the concept of “something to put small things in”. The results include several small pouches which could be used to store small items.

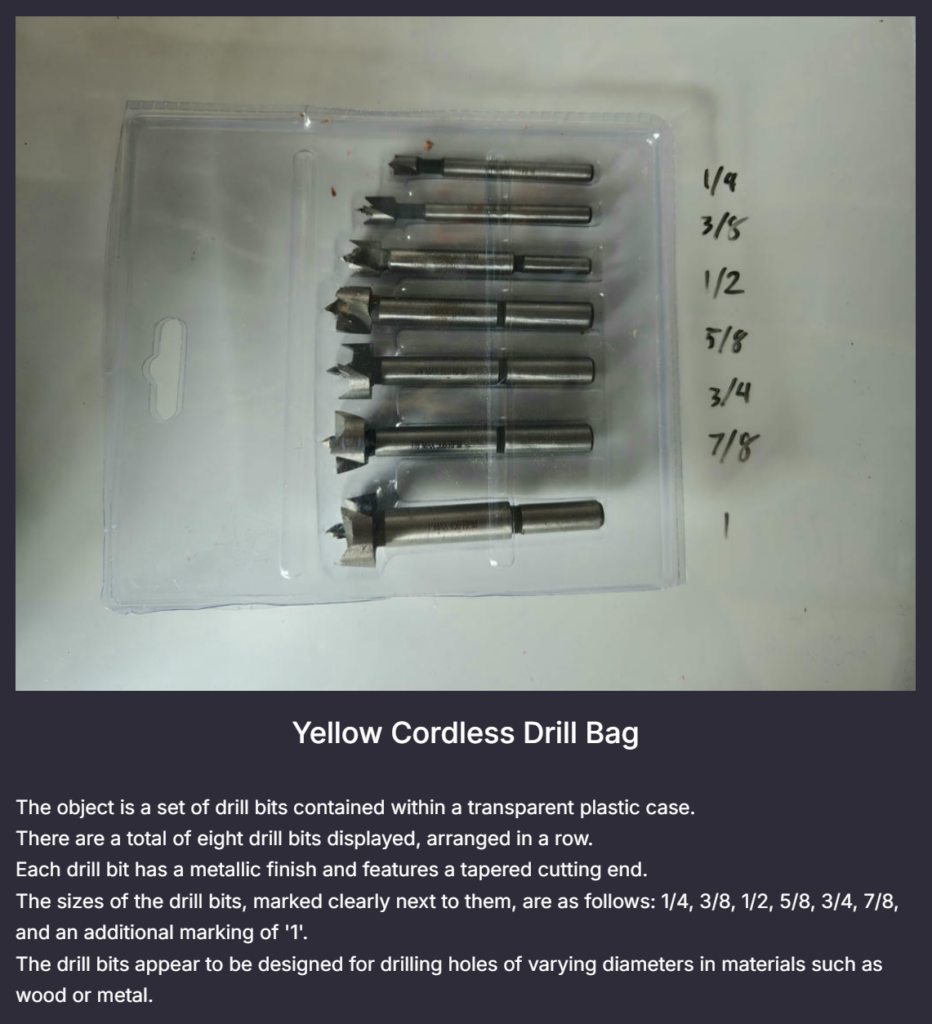

For the last search demo, we search for “hole in wood”. As expected, we got multiple drill bits and wood screws. Note how the description for “Drill Bit Set” does not contain the words “wood” or “hole”.

Because the LLM does its best to read all the text it sees, we can make sure specific text makes it into the description by writing it on a whiteboard off to the side.

Finally, I wanted to show the “containers” page. It lets you browse your containers and move items around via drag and drop.

Web app tech stack

As mentioned before, the database implementation is Sqlite-vec. The backend code uses Axum, a Rust web framework. CSS/styling is done with Bootstrap 5. HTML templating is handled by MiniJinja.

It’s been a few years since I last made a web app, and I was NOT excited to have to relearn a framework like Angular or Vue.js. However, I decided to finally give HTMX a try, and it was an absolutely lovely experience. I recommend giving their essays a read and the library a look. In short, you get extra HTML attributes you can put on elements, which give them a lot more dynamic functionality. Using it, I had a very responsive, modern-feeling web app with very little effort.

I also wanted to shout out Bootstrap Studio, which provides a really nice WYSIWYG editor for web pages. I’m not very familiar with the ins and outs of Bootstrap and CSS in general, so being able to play with the look and feel in the editor was very helpful. While my app isn’t the most impressive visually, I think it helped me get to something functional pretty quickly.

Problems and Pitfalls

Even with the automation, it is still a non-zero amount of work to go through and inventory my stuff. I’m hoping the effort is low enough that I will keep using and updating the system. Because there is some friction in reorganizing stuff managed by this app, I figure it is most useful as an “archival” solution. If you are taking items in and out frequently, you should probably consider a different approach.

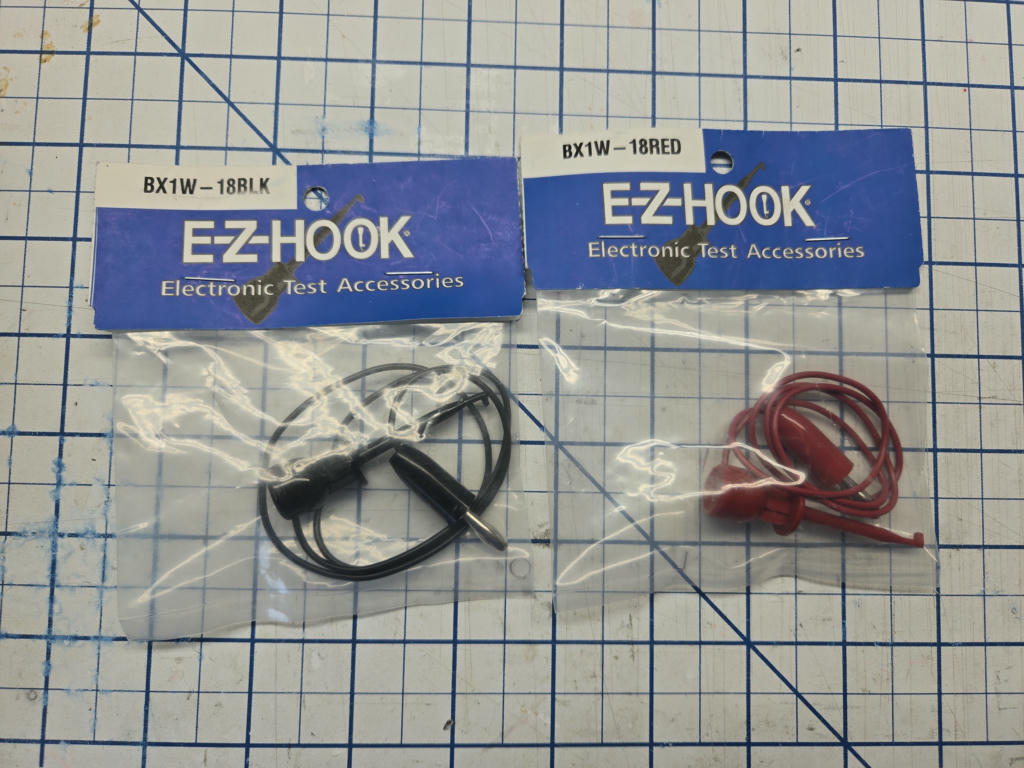

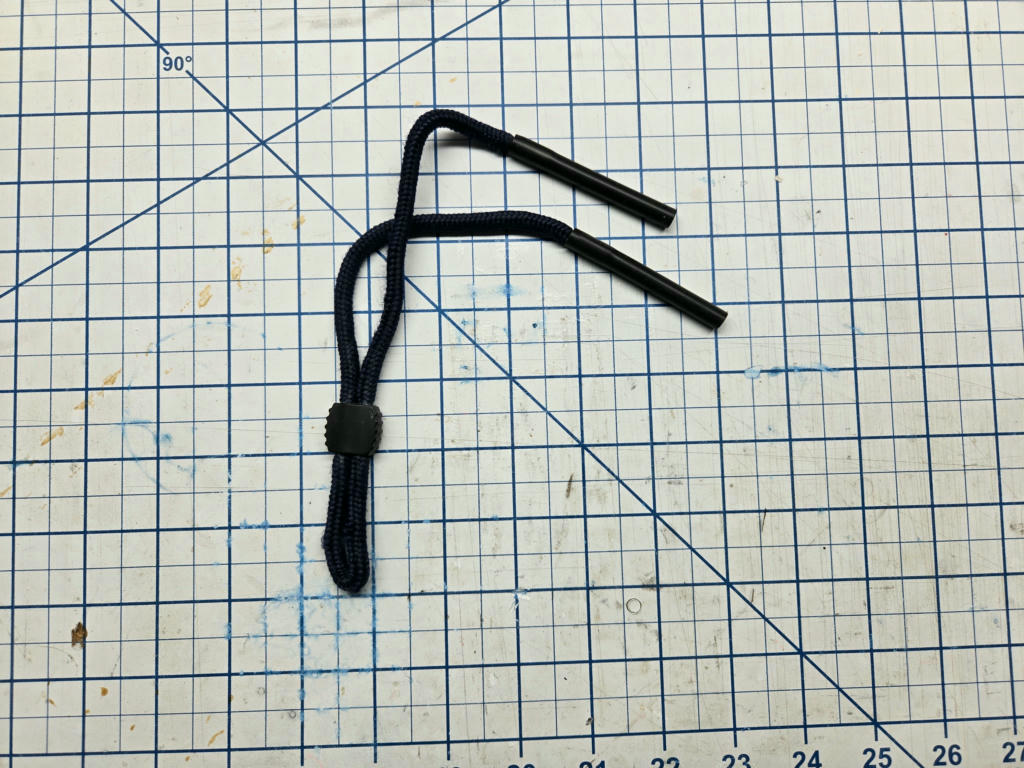

While the AI approach is super useful for data entry, it has limitations as well. The most obvious problem is that it doesn’t always know what it is looking at. I find this fair: if you were handed these items with no context, would you be able to identify them?

While it’s fair that the system fails to identify certain items, it also fails to tell me when it doesn’t know what it’s looking at (maybe this could be fixed with prompt engineering, but it’s a general LLM issue). This means some manual review is still necessary.

Lastly, the age-old issue with inventory systems: how do I keep it up to date? As I pull things from boxes and put new things in, those items will get lost if I don’t keep updating the web app. It’s arguably worse, since if a bin is mostly in the inventory system, I won’t realize there are non-inventoried items in it. I think the best way to deal with this is a periodic review (every year?) where I attest that the state of a container is still correct or make adjustments as necessary.

Potential future work

While I am trying to “lock” development on this project and just use it (otherwise projects have a bad tendency to just never be finished), there are a few improvements I would like to consider in the future.

- Clustering: Part of the value of generating vector embeddings for these items is that they can be represented in a high-dimensional space where similar items should theoretically be close to one another. By identifying similar items, the system could propose new ways to sort stuff that put similar items into the same or nearby containers.

- Reranking: In typical retrieval-augmented generation (RAG) pipelines, reranking takes a large number of initial results from the embedding search and “reranks” them so that only the most relevant ones are fed to the LLM that processes your overall query. In this application, it could be useful to let the initial embedding query pull back many candidate items and then pass them through a reranker to improve the quality of the search results.

- Better chunking strategies: For the web app, I make several chunks of text and embed them separately. Better strategies may lead to better results. For reference, here is how I chunked at the time of writing:

- Name only

- Name + full description

- Each line in the description (acting as a series of statements)

- Bring in an LLM: As currently implemented, an actual LLM is never used in the query process; it is only used for data ingest. There may be an advantage to running the query and then asking the LLM which result is best. After validating that the chosen result actually exists and wasn’t made up, we might have a powerful search tool. I do see an issue with this purely from a query-time perspective: search tools like this are expected to be fast, and the longer it takes to return results, the worse the experience is.

- Photo segmentation: Instead of taking one photo per item, you could take one photo of multiple items and then segment it into one photo per item. With a simple white background this could be pretty easy, but you’d have to handle edge cases, such as an item that is really a collection of small parts slightly separated from one another. If it were sufficiently accurate, this could reduce the overhead of getting large groups of items into the database.

- Integration with an existing inventory system: There are other inventory management systems that are much more fully fleshed out than this one. Building a plugin for another system such as InvenTree might be a better long-term solution.
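The chunking strategy listed above (name only, name + full description, and each description line) can be sketched as a function that turns one item into the texts to embed. Because each item then owns several vectors, a search can hit the same item more than once. One simple way to handle that, assumed here rather than taken from the app, is to keep each item’s best (smallest) distance. `Chunk`, `chunk_item`, and `best_hit_per_item` are hypothetical names.

```rust
use std::collections::HashMap;

/// One piece of text to embed, tagged with the item it came from.
#[derive(Debug, PartialEq)]
struct Chunk {
    item_id: u32,
    text: String,
}

/// Split an item into chunks: name only, name + full description,
/// and each non-empty description line as its own statement.
fn chunk_item(item_id: u32, name: &str, description: &str) -> Vec<Chunk> {
    let mut chunks = vec![
        Chunk { item_id, text: name.to_string() },
        Chunk { item_id, text: format!("{name}\n{description}") },
    ];
    for line in description.lines().map(str::trim).filter(|l| !l.is_empty()) {
        chunks.push(Chunk { item_id, text: line.to_string() });
    }
    chunks
}

/// Collapse chunk-level hits (item_id, distance) into one hit per item,
/// keeping the smallest distance, sorted nearest first.
fn best_hit_per_item(hits: &[(u32, f32)]) -> Vec<(u32, f32)> {
    let mut best: HashMap<u32, f32> = HashMap::new();
    for &(id, dist) in hits {
        best.entry(id)
            .and_modify(|d| *d = d.min(dist))
            .or_insert(dist);
    }
    let mut items: Vec<(u32, f32)> = best.into_iter().collect();
    items.sort_by(|a, b| a.1.total_cmp(&b.1));
    items
}
```

The per-line chunks let a query match a single specific statement (say, “Stored in a tin.”) without being diluted by the rest of a long description, while the name-only chunk keeps short, name-like queries working.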

Conclusion

As of writing this, I’ve actually been using this for a few weeks with a few containers inventoried. It has already been useful: I used it to find which bin had some magnets I was looking for. The code is open source under an MIT license and can be found at: https://github.com/anichno/stuff-search. I’ve tried to include enough documentation there to get up and running, but note that for now the app will want a little over 1.25 GB of RAM to run (since it’s running that embedding model). Feel free to give it a try if you think it might be useful; otherwise, it’s an example of how to do the things mentioned above.

Thanks for making it this far! Feel free to leave a comment or share it with anyone you know who might find these kinds of projects interesting.
